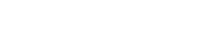

Deep Learning Embedded Systems

Embedded systems that run deep learning models bring tremendous benefits. Deep learning embedded systems have transformed businesses and organizations across every industry: deep learning models can help automate industrial processes, analyze data in real time for decision making, and even issue early warnings. These AI embedded systems have helped reduce costs and increase revenue in industries including manufacturing, supply-chain management, healthcare, and more. This blog post covers everything you need to know about deep learning embedded systems and provides an excellent source for buying deep learning embedded computers.

How Are Deep Learning Models Created?

Creating a deep learning model involves several stages, from training and refining the model to preparing it to run on embedded systems at the edge. Training is the most time- and effort-intensive part of the process: the model is fed large datasets, which takes time and heavy computing power. The goal of training is to produce an accurate model for the intended application. Once trained, the model goes through evaluation and hyperparameter tuning, in which settings that are not learned from the data (such as the learning rate or the number of training passes) are adjusted to improve accuracy. When the desired accuracy is achieved, the model is prepared for prediction (inference) and finally deployed on an embedded system at the edge to handle its target application.
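To make the train, evaluate, and tune stages concrete, here is a minimal sketch in plain Python. It assumes a hypothetical toy dataset (label 1 when x1 + x2 > 1) and uses a tiny logistic-regression classifier as a stand-in for a deep network. The grid search over learning rate and epochs is just one common tuning approach, and the 0.9 accuracy target is an arbitrary example value, not a recommended threshold.

```python
import math
import random


def make_dataset(n, seed):
    """Hypothetical toy data: label is 1 when x1 + x2 > 1, else 0."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x1, x2 = rng.random(), rng.random()
        data.append(((x1, x2), 1 if x1 + x2 > 1.0 else 0))
    return data


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(data, learning_rate, epochs):
    """Training stage: fit a tiny logistic-regression model with SGD."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in data:
            p = sigmoid(w1 * x1 + w2 * x2 + b)
            grad = p - y  # derivative of log-loss with respect to the logit
            w1 -= learning_rate * grad * x1
            w2 -= learning_rate * grad * x2
            b -= learning_rate * grad
    return (w1, w2, b)


def accuracy(model, data):
    """Evaluation stage: fraction of examples classified correctly."""
    w1, w2, b = model
    correct = sum(
        1 for (x1, x2), y in data
        if (1 if w1 * x1 + w2 * x2 + b > 0 else 0) == y
    )
    return correct / len(data)


def tune(train_set, val_set, grid):
    """Hyperparameter tuning: keep the setting with the best validation accuracy."""
    best = None
    for lr, epochs in grid:
        model = train(train_set, lr, epochs)
        acc = accuracy(model, val_set)
        if best is None or acc > best[0]:
            best = (acc, lr, epochs, model)
    return best


train_set = make_dataset(400, seed=0)
val_set = make_dataset(200, seed=1)
test_set = make_dataset(200, seed=2)  # held out until tuning is finished

grid = [(lr, ep) for lr in (0.01, 0.1, 1.0) for ep in (5, 20)]
val_acc, lr, epochs, model = tune(train_set, val_set, grid)
test_acc = accuracy(model, test_set)

TARGET = 0.9  # hypothetical "desired accuracy" before deployment
ready_to_deploy = test_acc >= TARGET
print(f"lr={lr}, epochs={epochs}, val={val_acc:.2f}, test={test_acc:.2f}, deploy={ready_to_deploy}")
```

Keeping a separate test set that the tuning loop never sees gives a fairer estimate of how the model will behave once it is running on the edge device.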

Why Run Deep Learning Models at the Edge?

In the beginning, deep learning models ran in the cloud, where they were trained in huge data centers full of powerful computers, because devices at the edge were not powerful enough to run them. However, the explosion of powerful hardware such as GPUs, VPUs, multi-core processors, NVMe SSDs, FPGAs, and ASICs has enabled AI models to run on embedded systems away from the cloud. Running the model close to where the application is performed provides several advantages and eliminates bottlenecks for AI applications.

Benefits of Running Deep Learning Models at the Edge:

One of the main reasons for migrating data processing from the cloud to the edge is latency. Every request sent to a cloud data center must travel over the network and back before a decision arrives, and edge applications that rely on cloud-hosted deep learning models need an internet connection 24/7 to perform their task. There is no guarantee of a constant, fast connection, especially in remote locations where internet access might not be available at all. This can be a disaster for mission-critical applications that need real-time decision making, such as autonomous vehicles.
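A quick calculation shows why milliseconds matter for a vehicle. This sketch converts a decision delay into the distance traveled before the decision arrives. The 200 ms and 20 ms latencies are hypothetical values chosen only for illustration, not measurements of any real network or device.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Meters traveled while waiting latency_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000  # ms -> s


# Hypothetical latencies for illustration only.
for label, latency_ms in [("cloud round trip", 200), ("on-device inference", 20)]:
    meters = distance_during_latency(100, latency_ms)
    print(f"{label}: {latency_ms} ms -> {meters:.2f} m traveled at 100 km/h")
```

At 100 km/h a car covers about 27.8 m every second, so under these assumed numbers the slower path lets the vehicle travel roughly ten times farther before it can react.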

Compared to huge data centers, which require a tremendous amount of power to run their computers and additional electricity to cool the servers, embedded systems are much more power-efficient. Fanless deep learning embedded systems use passive cooling for their internal components, which lowers power consumption even further and helps cut towering electricity costs.

Constantly sending huge amounts of data to the cloud also creates a bandwidth problem. By moving the deep learning algorithm to the edge, embedded systems no longer need a constant internet connection: they analyze situations in real time on site and send only important information to the cloud for further analysis. For instance, smart surveillance applications that run deep learning at the edge can filter out unimportant security footage and send only footage that shows activity, which is the information that is valuable to the application.
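The filtering idea can be sketched with simple frame differencing: compare each frame with the previous one and forward it only if enough pixels changed. This is a deliberately simpler stand-in for the deep learning filter described above (a real system might run an object detector instead), and the frame format, function names, and threshold are hypothetical.

```python
from typing import Iterable, Iterator, List, Optional, Tuple

Frame = List[List[int]]  # hypothetical grayscale frame: rows of 0-255 pixel values


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute pixel change between two equally sized frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count


def frames_to_upload(frames: Iterable[Frame], threshold: float) -> Iterator[Tuple[int, Frame]]:
    """Yield (index, frame) only for frames that differ noticeably from the previous one."""
    previous: Optional[Frame] = None
    for index, frame in enumerate(frames):
        if previous is not None and mean_abs_diff(previous, frame) > threshold:
            yield index, frame  # "active" footage worth sending to the cloud
        previous = frame


static = [[10] * 4 for _ in range(4)]
moving = [[10, 10, 200, 200] for _ in range(4)]
stream = [static, static, moving, moving, static]
uploaded = [i for i, _ in frames_to_upload(stream, threshold=5.0)]
print(uploaded)  # [2, 4]
```

Only two of the five frames leave the device in this example; everything else is discarded locally, which is exactly the bandwidth saving the paragraph describes.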

Eliminating the need to constantly send data to and receive data from the cloud reduces the risk of data breaches and personal information leaks that can jeopardize business operations. Moreover, industrial deep learning embedded systems can be configured with a TPM 2.0 (Trusted Platform Module) chip for extra security. The TPM helps secure data against remote attackers and keeps encryption keys in dedicated hardware, so data encrypted with those keys stays protected even if the device is physically stolen.

Computing at the edge requires less electricity, which means a smaller carbon footprint and better sustainability. Huge cloud data centers that run deep learning models demand plenty of electricity, resulting in large CO2 emissions that harm the environment. Fanless deep learning embedded systems run on much less electricity, making them a far more sustainable solution.

Deep Learning At The Edge | Supporting Factors

1. Software: More Efficient AI Model

Earlier deep learning models were much larger and less efficient than today's. The smaller a trained model is, the less storage and compute power an edge device needs to run it. AI developers can compress and optimize models, making them many times smaller and faster, with techniques such as pruning, weight sharing, quantization, Winograd transformation (a fast convolution algorithm that reduces the number of multiplications), and more. As a result, high-accuracy deep learning models that need less storage and computing power make embedded systems a powerful solution at the edge.
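Two of the techniques named above, pruning and quantization, can be sketched on a plain list of weights. This minimal example assumes magnitude pruning (zeroing the smallest weights) and symmetric per-tensor int8 quantization, which are common variants but not the only ones; real toolchains apply them to whole tensors, usually with fine-tuning or calibration. Weight sharing and Winograd transformation are not shown, and the weight values are made up.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitudes."""
    k = int(len(weights) * sparsity)
    pruned = list(weights)
    if k == 0:
        return pruned
    # Indices sorted from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric int8 quantization: w is approximated by scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.82, -0.03, 0.41, -0.67, 0.005, 0.29, -0.12, 0.55]  # made-up example weights
pruned = magnitude_prune(weights, sparsity=0.25)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)

print("pruned:", pruned)
print("int8 codes:", q, "scale:", round(scale, 6))
print("max error:", max(abs(r - w) for r, w in zip(restored, pruned)))
```

Storing each weight as one int8 byte instead of a 4-byte float32 cuts storage by 4x, and the zeros left by pruning can be skipped or compressed further; the cost is a small rounding error of at most half the scale per weight.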

2. Hardware: Powerful Accelerators

Some hardware is designed specifically to help embedded systems run deep learning models at the edge. These hardware accelerators are built around the way deep learning algorithms perform their calculations and manage data. For example, industrial computers can be equipped with accelerators that run AI workloads such as machine learning and deep learning much faster and more efficiently than the system's CPU alone. AI hardware accelerators include GPUs (graphics processing units), VPUs (vision processing units), and FPGAs (field-programmable gate arrays).

Powerful GPUs and VPUs

GPUs (graphics processing units) consist of hundreds or thousands of cores that can perform a myriad of calculations at once. This is a perfect fit for deep learning models, which rely heavily on linear algebra such as vector operations and matrix multiplications. VPUs are likewise designed to process large numbers of vision-related calculations in parallel, which makes both GPUs and VPUs highly efficient for deep learning workloads. For instance, NVIDIA GPUs with Tensor Cores are built specifically to accelerate the matrix math at the heart of deep learning algorithms.
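The reason matrix math parallelizes so well can be seen in a single fully connected layer: each output is an independent dot product, so all of them can be computed at the same time. The sketch below computes a layer sequentially and then with a thread pool to show the results are identical regardless of how the work is split. The function names are hypothetical, and Python threads will not actually speed this up (because of the GIL); the point is only that the tasks do not depend on one another, which is what lets a GPU spread them across many cores.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def dot(row: Sequence[float], x: Sequence[float]) -> float:
    """One neuron: dot product of its weight row with the input vector."""
    return sum(w * xi for w, xi in zip(row, x))


def dense_sequential(W: List[List[float]], x: List[float], b: List[float]) -> List[float]:
    """Fully connected layer y = W x + b, one output at a time."""
    return [dot(row, x) + bias for row, bias in zip(W, b)]


def dense_parallel(W: List[List[float]], x: List[float], b: List[float], workers: int = 4) -> List[float]:
    """Same layer, with each output computed as a separate, independent task."""
    def neuron(i: int) -> float:
        return dot(W[i], x) + b[i]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(neuron, range(len(W))))  # map preserves output order


W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
x = [1.0, 1.0]
b = [0.0, 1.0, 2.0]
print(dense_sequential(W, x, b))  # [3.0, 8.0, 13.0]
print(dense_parallel(W, x, b))    # [3.0, 8.0, 13.0]
```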

Faster RAM and SSDs

Essentially, RAM's role in deep learning computation is to hold the latest data loaded from the SSDs so it can be fed to the GPUs/VPUs for computation. Modern RAM offers larger capacity and higher speed; DDR4 SDRAM, for example, transfers data to GPUs/VPUs very quickly, which boosts deep learning performance. Under the JEDEC standard, DDR4 SDRAM reaches an I/O bus clock of up to 1600 MHz (3200 MT/s, sold as DDR4-3200), faster than the previous DDR3 generation, which topped out at about 1066 MHz (DDR3-2133).
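The clock rate translates into bandwidth with a simple formula: double data rate memory moves data twice per I/O clock cycle, and each transfer carries the full bus width. This sketch assumes a standard 64-bit (8-byte) channel and computes the theoretical peak per channel; sustained real-world bandwidth is lower, and DDR3-1600 is picked only as a common DDR3 speed grade for comparison.

```python
def peak_bandwidth_gb_s(io_clock_mhz: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth of one DDR memory channel in GB/s."""
    transfers_per_s = io_clock_mhz * 1e6 * 2  # double data rate: two transfers per clock
    bytes_per_transfer = bus_width_bits / 8   # 64-bit channel -> 8 bytes per transfer
    return transfers_per_s * bytes_per_transfer / 1e9


print(f"DDR4-3200: {peak_bandwidth_gb_s(1600):.1f} GB/s")  # 25.6
print(f"DDR3-1600: {peak_bandwidth_gb_s(800):.1f} GB/s")   # 12.8
```

Systems with two or more memory channels multiply this figure accordingly.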

The same applies to SSDs: more storage and faster speed. PCIe NVMe SSDs are far faster than earlier SATA SSDs and remove the bottleneck of the SATA interface. A SATA III link tops out at about 600 MB/s, while an NVMe SSD on a PCIe 3.0 x4 link has a theoretical throughput of up to about 4 GB/s.
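The roughly 4 GB/s NVMe figure can be derived from the link parameters. This sketch assumes a PCIe 3.0 x4 link (a common M.2 NVMe configuration) and compares it with SATA III; it accounts only for line encoding overhead, so actual drives deliver somewhat less. PCIe 4.0 doubles the per-lane rate to 16 GT/s with the same encoding.

```python
def link_throughput_gb_s(rate_gt_s: float, lanes: int, payload_bits: int, encoded_bits: int) -> float:
    """Usable link throughput in GB/s after line-encoding overhead."""
    raw_gbit = rate_gt_s * lanes                          # raw bits per second, in Gb/s
    usable_gbit = raw_gbit * payload_bits / encoded_bits  # remove encoding overhead
    return usable_gbit / 8                                # bits -> bytes


sata3 = link_throughput_gb_s(6.0, 1, 8, 10)             # SATA III: 6 Gb/s, 8b/10b encoding
nvme_gen3_x4 = link_throughput_gb_s(8.0, 4, 128, 130)   # PCIe 3.0: 8 GT/s per lane, 128b/130b

print(f"SATA III:    {sata3:.2f} GB/s")          # 0.60
print(f"PCIe 3.0 x4: {nvme_gen3_x4:.2f} GB/s")   # 3.94
print(f"ratio:       {nvme_gen3_x4 / sata3:.1f}x")
```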

3. Inference Analysis | Software and Hardware Combination

Once a deep learning model has been trained to the required prediction accuracy, it is ready to be deployed on an embedded system at the edge to perform inference. Deep learning inference means using a trained model to make predictions on new data, for example recognizing objects in images to give machines vision. After training, AI models are compressed and optimized for efficiency, making them ready for deployment on deep learning embedded systems. These systems are configured with powerful hardware, including CPUs, GPUs, VPUs, FPGAs, and SSDs, plus rich I/O, so they can receive data from many different sensors and seamlessly run real-time deep learning inference at the edge.
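The inference stage on an edge device typically boils down to a loop: read a sensor, preprocess the reading, run the trained model, and act on the result within a latency budget. The sketch below shows that structure with hypothetical stand-ins: `read_sensor`, `preprocess`, and the threshold "model" are placeholders for a real sensor driver and an optimized network, and the 50 ms budget is an arbitrary example.

```python
import itertools
import time
from typing import Callable, List, Sequence, Tuple


def run_inference_loop(
    read_sensor: Callable[[], Sequence[float]],
    preprocess: Callable[[Sequence[float]], List[float]],
    model: Callable[[List[float]], str],
    frames: int,
    latency_budget_ms: float,
) -> List[Tuple[str, float, bool]]:
    """Run the model on `frames` sensor readings and record per-frame latency."""
    results = []
    for _ in range(frames):
        start = time.perf_counter()
        raw = read_sensor()
        x = preprocess(raw)
        prediction = model(x)
        elapsed_ms = (time.perf_counter() - start) * 1000
        results.append((prediction, elapsed_ms, elapsed_ms <= latency_budget_ms))
    return results


# Hypothetical stand-ins for a sensor driver and a trained, optimized model.
readings = itertools.cycle([[20, 30, 25], [200, 220, 210]])


def read_sensor() -> Sequence[float]:
    return next(readings)


def preprocess(raw: Sequence[float]) -> List[float]:
    return [v / 255 for v in raw]  # scale 8-bit readings to [0, 1]


def model(x: List[float]) -> str:
    return "alert" if sum(x) / len(x) > 0.5 else "normal"


results = run_inference_loop(read_sensor, preprocess, model, frames=4, latency_budget_ms=50.0)
for prediction, ms, within_budget in results:
    print(prediction, f"{ms:.3f} ms", "within budget" if within_budget else "over budget")
```

Because every step runs on the device, the decision is available as soon as the model finishes, with no network round trip in the loop.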

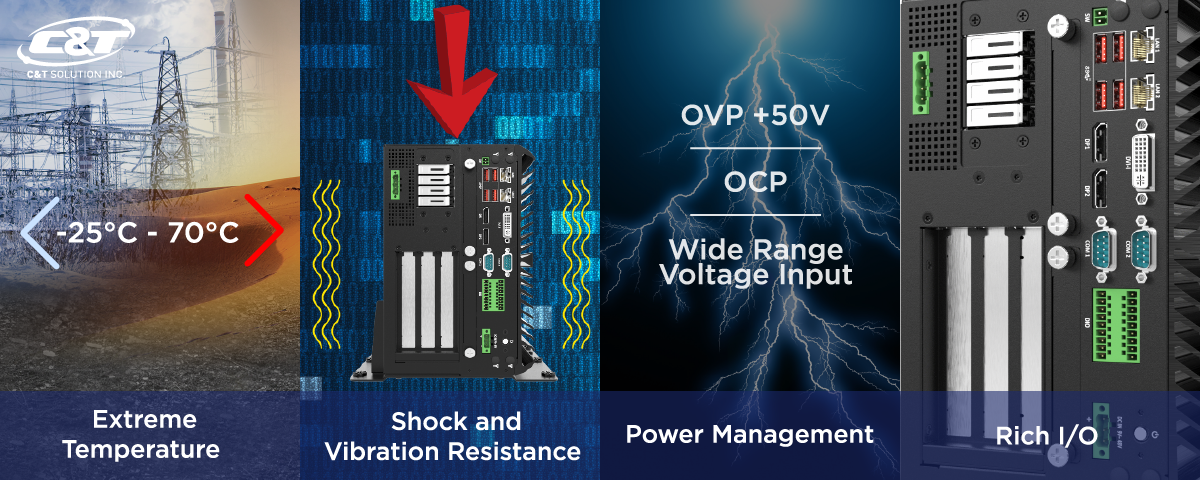

Deep Learning Rugged Embedded Systems | FULL PROTECTION

Edge computers are often deployed in remote locations and exposed to extreme environmental conditions. To ensure that a deep learning industrial computer performs reliably and optimally, it is ruggedized to withstand harsh conditions that can jeopardize non-hardened systems. Standard embedded systems are not rugged enough for extreme environments and can easily fail when deployed in industrial settings. Therefore, make sure to deploy rugged, industrial-grade deep learning embedded systems that are equipped with rich I/O supporting both legacy and modern technologies and that can withstand extreme temperatures, heavy shocks, constant vibration, voltage surges, and more.